- A news coverage first

- Facial recognition and AI technology

- Integrated UX and UI for an interactive video player

- Built in under three months

- Watched by over 10 million

- Global PR coverage

When Prince Harry and Meghan Markle got married in 2018, the world went mad for a Royal Wedding. It was a total and utter media frenzy. Sky News was looking for an opportunity to stand out, to give viewers an experience like no other. Groundbreaking UX and UI, facial recognition and the power of AI were about to make some serious headlines.

Innovation on a royal scale

Try to look past what you know now, back to 2018, when Harry was still a Prince and Meghan Markle was still that talented actor you knew from Suits – it was a year fit for an historic Royal Wedding. On Saturday, May 19th, 2018, around 29.2 million people tuned in to watch Prince Harry of Wales marry American actor Meghan Markle at Windsor Castle, England. It sparked a global media frenzy, with broadcasters fighting for the competitive edge to win viewers.

Sky News was one such broadcaster. Often at the leading edge of news journalism and technology, it approached UIC Digital to help bring a powerful user experience to viewers during the Royal Wedding. One that would bring added value to its coverage and make it stand out from the crowd.

With public intrigue around the Royal Wedding at an all-time high, the who’s who of Harry and Meghan’s guestlist was the ideal opportunity to be different. Scrutinising the couple’s royal wedding guests is must-watch TV for the British public, so Sky News decided to develop an interactive user experience that would deliver it.

An AI-powered game-changer

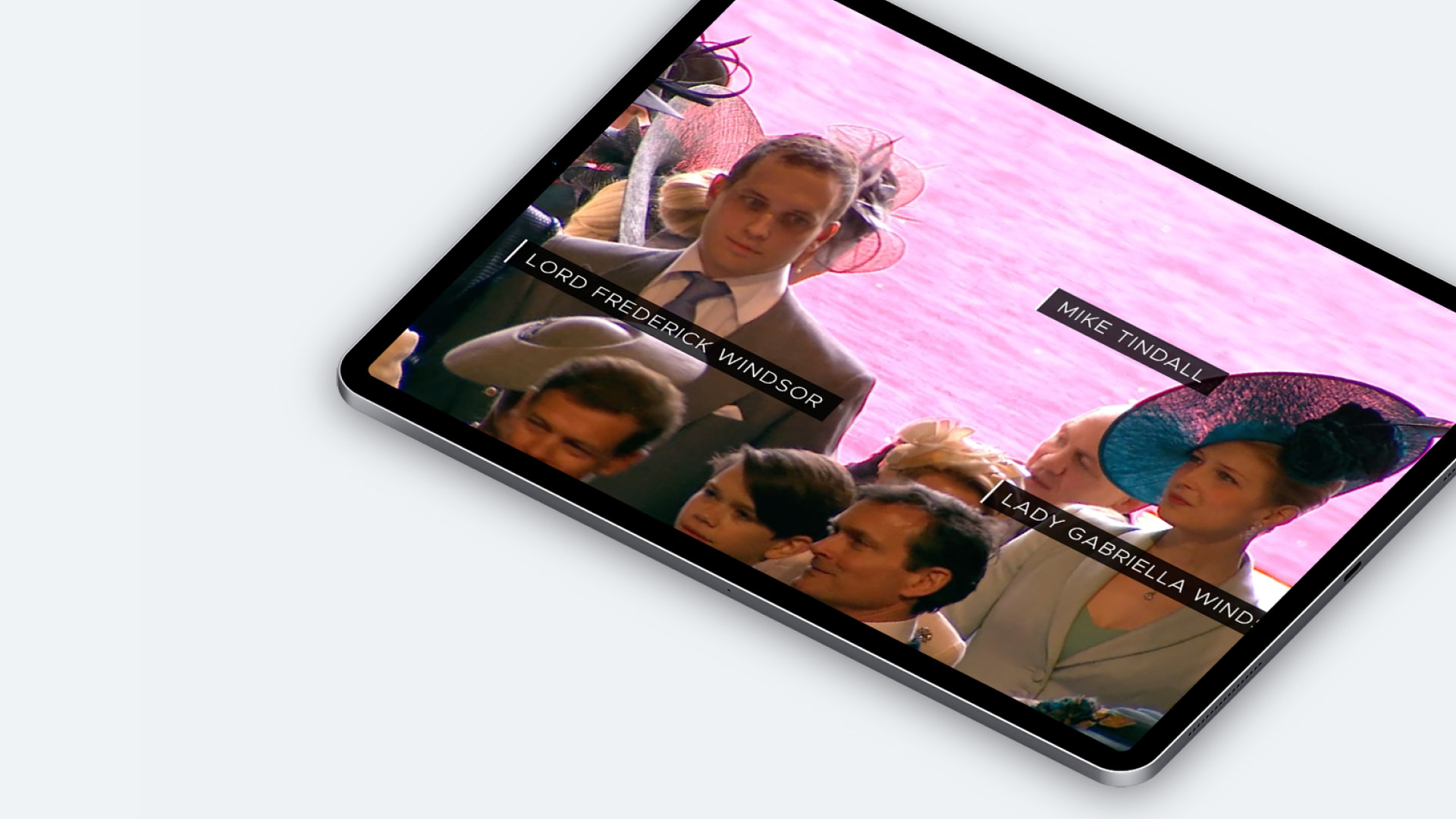

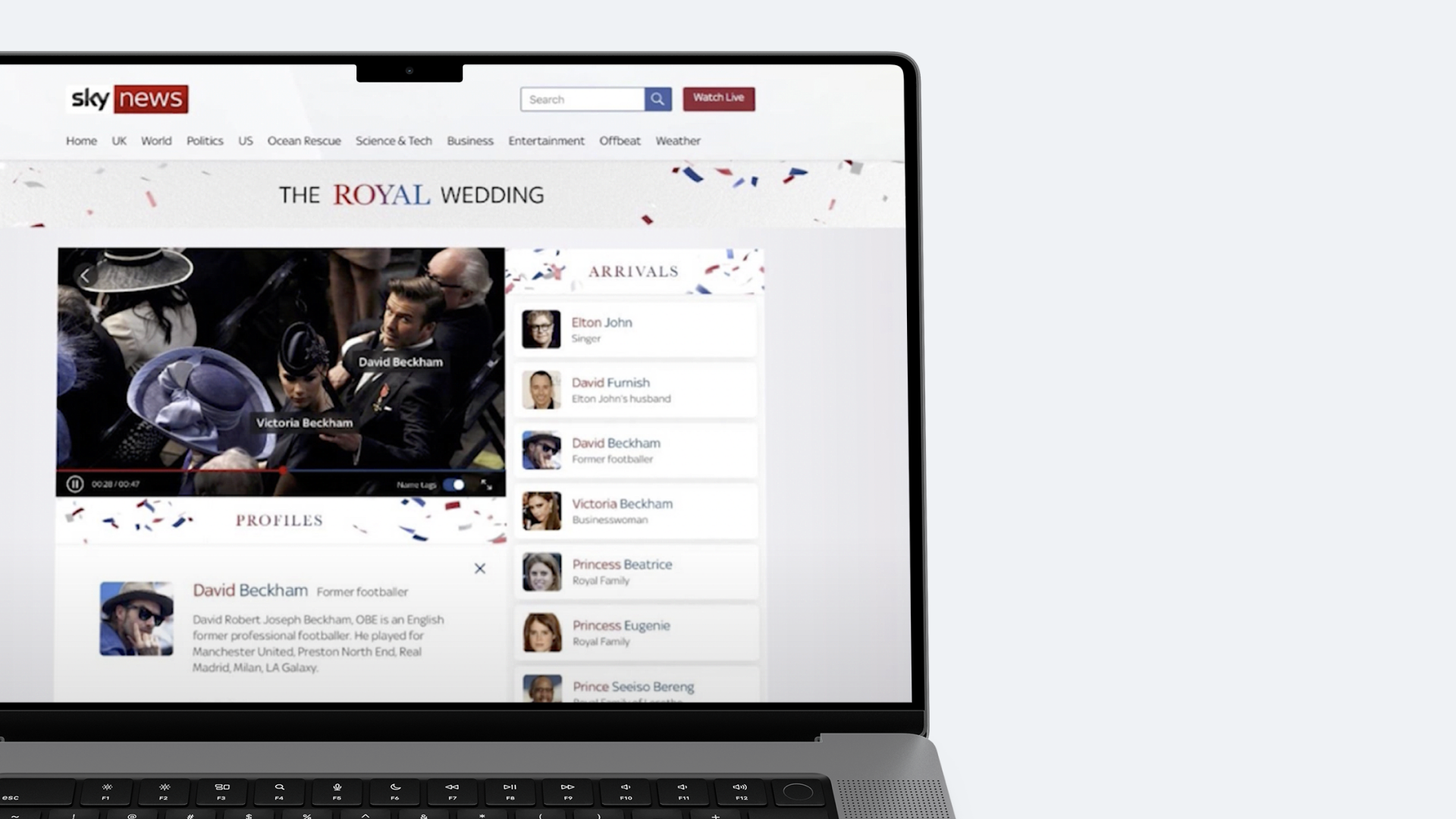

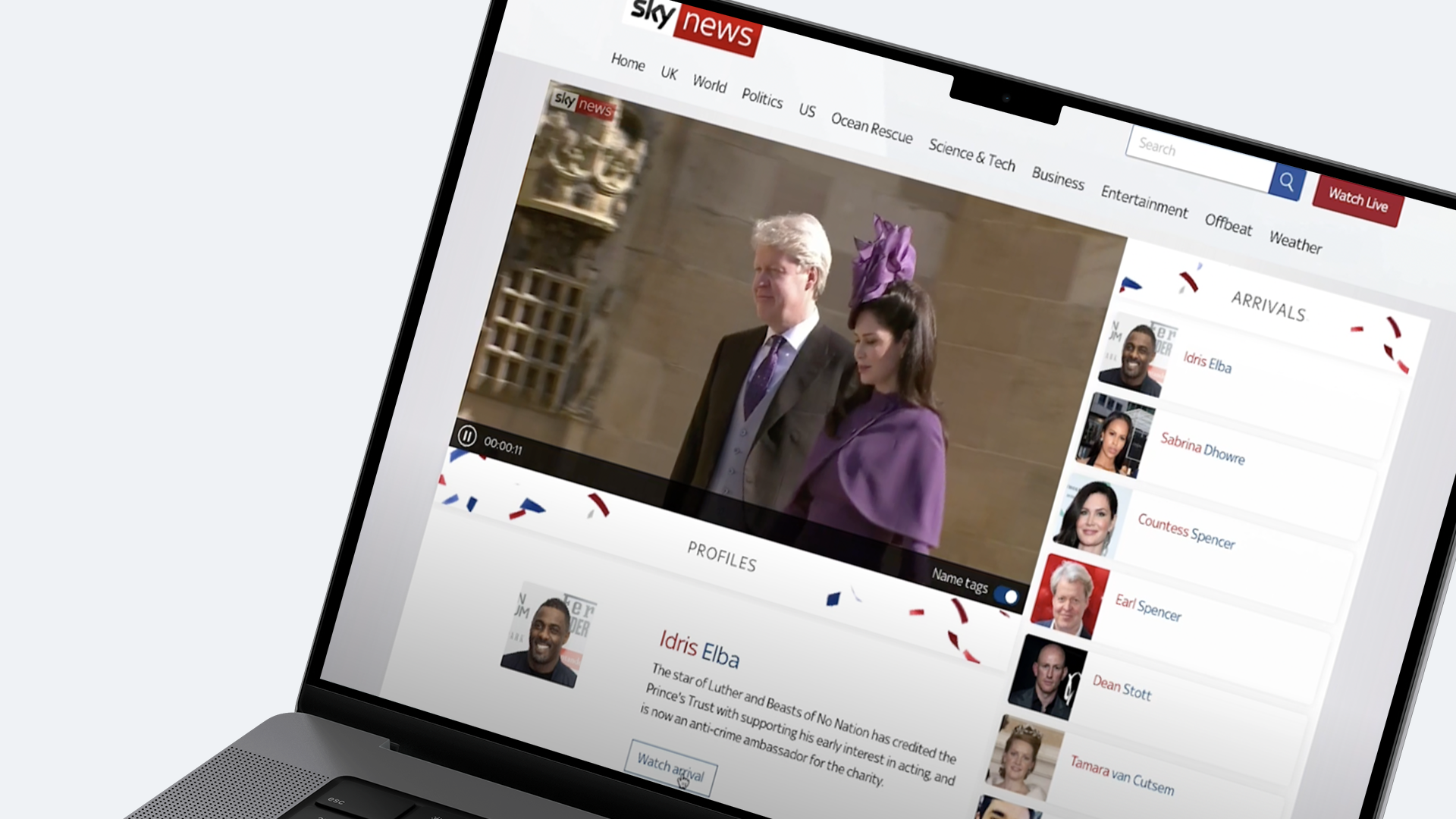

The idea was to use AI technology to power an automatic ‘who’s who’ of the crowd using facial recognition, with profiles brought up alongside live coverage, to gamify the news and gratify its audience. Working alongside AI specialists GrayMeta, we first designed the frontend single-page application and video player to bring the viewing experience to life, harnessing Sky’s machine-learning workflow as we went. This involved using Angular.js, TypeScript, HTML5 and SCSS stylesheets.

Sky’s Royal Wedding ‘Who’s Who’ functionality used facial recognition to highlight public figures arriving on the day. Each one was added to a makeshift guestlist to the right of the video player, with a profile to read for whichever guest you clicked. That might seem fairly standard in today’s world but, five years ago, facial recognition technology was cutting-edge. Just think about it – Apple had only just made it standard on iPhones and just about the only other place you’d see it was at the fiddly automatic passport gates at the UK border.

Once a celeb was spotted by our AI, the player told you who they were and how they knew Harry and Meghan, using on-screen captions and graphics to keep things visual. It meant viewers could search for their favourite celebs without needing to leave Sky’s live royal coverage for even one second. Hands-on and self-guided, it was a celeb-spotting session to rival any paparazzi reporter.
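To make the guestlist behaviour above a little more concrete, here is a minimal TypeScript sketch of how a panel like this could keep track of spotted guests. It is an illustration only, not the code that shipped: the `GuestSighting` shape, the `GuestList` class and the placeholder guests are all assumptions made for the example.

```typescript
// Hypothetical shape of one spotted guest; field names are illustrative.
interface GuestSighting {
  guestId: string;
  name: string;
  relation: string;      // how the guest knows the couple
  seenAtSeconds: number; // stream position when the guest was first spotted
}

class GuestList {
  private readonly byId = new Map<string, GuestSighting>();

  // Record a sighting. Repeat sightings of the same guest are ignored so
  // each person appears in the panel once. Returns true if the guest was added.
  add(sighting: GuestSighting): boolean {
    if (this.byId.has(sighting.guestId)) {
      return false;
    }
    this.byId.set(sighting.guestId, sighting);
    return true;
  }

  // Guests sorted by the moment they first appeared on screen.
  inArrivalOrder(): GuestSighting[] {
    return Array.from(this.byId.values()).sort(
      (a, b) => a.seenAtSeconds - b.seenAtSeconds
    );
  }

  // Case-insensitive name search, so viewers can find a favourite guest.
  search(query: string): GuestSighting[] {
    const q = query.trim().toLowerCase();
    return this.inArrivalOrder().filter((g) => g.name.toLowerCase().includes(q));
  }
}

// Placeholder data, not real guests.
const guests = new GuestList();
guests.add({ guestId: "g2", name: "Example Guest Two", relation: "Friend of the couple", seenAtSeconds: 95 });
guests.add({ guestId: "g1", name: "Example Guest One", relation: "Former colleague", seenAtSeconds: 40 });
guests.add({ guestId: "g2", name: "Example Guest Two", relation: "Friend of the couple", seenAtSeconds: 300 });
```

Keying the list by guest ID means a face that is recognised again later in the coverage does not create a duplicate entry, and the stored `seenAtSeconds` gives a player something to seek to when a viewer clicks a profile.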

After the big day, Sky published a video-on-demand version of the Royal Wedding that gave viewers the option to pause and rewind, as well as follow an individual guest arrival.

No challenge too great

We had to design, build and deliver this app in only two and a half months. Not only that, but no official royal guestlist was published before the event, so our facial recognition technology had to work fast and efficiently. It was tricky at best. To solve the problem, we worked alongside Sky journalists to scour social media and news articles looking for clues about who might be coming. That work resulted in a list of 800 anticipated guests – and our AI would need 10-15 facial images of each uploaded to stand a chance on the day. That’s 8,000 images at the very least! It took us two full months to pull it together.

The functionality was built on AWS cloud infrastructure for speed and access to scalable machine learning. Combined with GrayMeta’s data analysis platform and our interactive UI design, Amazon Rekognition’s video and image analysis picked our celebs out of the crowd in real time, whilst tagged metadata put names to the faces and was stored in the video player. An outside broadcast van near St George’s Chapel at Windsor Castle sent a live video feed to AWS, where it was run through AWS Elemental MediaPackage and distributed back to Sky through the Amazon CloudFront content delivery network.
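As a rough illustration of the tagged-metadata step, the TypeScript sketch below turns a stream of face matches into timed tags that a video player could look up by playback position. It does not call any AWS service and is not the production pipeline: the `FaceMatch` and `TimedTag` shapes, the 90% confidence threshold and the two-second merge gap are all assumptions chosen for the example.

```typescript
// Hypothetical shape of one match coming back from a recognition step.
interface FaceMatch {
  guestId: string;
  confidence: number;  // 0-100, higher means more certain
  timestampMs: number; // position in the video where the face was seen
}

// A span of video during which a guest is considered to be on screen.
interface TimedTag {
  guestId: string;
  startMs: number;
  endMs: number;
}

// Drop low-confidence matches, then merge matches of the same guest that
// fall within maxGapMs of each other into a single tag.
function buildTags(matches: FaceMatch[], minConfidence = 90, maxGapMs = 2000): TimedTag[] {
  const tags: TimedTag[] = [];
  const latestByGuest = new Map<string, TimedTag>();
  const confident = matches
    .filter((m) => m.confidence >= minConfidence)
    .sort((a, b) => a.timestampMs - b.timestampMs);

  for (const match of confident) {
    const current = latestByGuest.get(match.guestId);
    if (current && match.timestampMs - current.endMs <= maxGapMs) {
      current.endMs = match.timestampMs; // extend the guest's current tag
    } else {
      const tag: TimedTag = {
        guestId: match.guestId,
        startMs: match.timestampMs,
        endMs: match.timestampMs,
      };
      tags.push(tag);
      latestByGuest.set(match.guestId, tag);
    }
  }
  return tags;
}

// Tags that cover a given playback position.
function tagsAt(tags: TimedTag[], playerMs: number): TimedTag[] {
  return tags.filter((t) => t.startMs <= playerMs && playerMs <= t.endMs);
}

// Placeholder matches, not real recognition output.
const tags = buildTags([
  { guestId: "g1", confidence: 99, timestampMs: 1000 },
  { guestId: "g2", confidence: 95, timestampMs: 2000 },
  { guestId: "g1", confidence: 97, timestampMs: 2500 },
  { guestId: "g1", confidence: 60, timestampMs: 3000 }, // below threshold, dropped
  { guestId: "g1", confidence: 98, timestampMs: 10000 },
]);
```

A higher threshold trades missed guests for fewer wrong names on screen, which is likely the safer side to err on during a live broadcast; the right values would depend on the footage and the reference images.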

A royal success

The broadcast raked in over 10 million views and over 100,000 concurrent views. It picked up worldwide PR coverage, ranging from The Observer to The Verge to The Hollywood Reporter and The Washington Post. As far as news coverage goes, it was the talk of the town.

This is just the start. Second-screen viewing, information-rich content and gamified video-on-demand are all booming. Look at Sky F1 – you can ride on board with any driver on the grid whilst live coverage plays side by side. And, whilst the technological advances are there, UX and UI must keep up to give viewers the best watching experience around. Which is why we’re here, as a creative engineering company you can trust to take the moments that matter most to the next level through game-changing design, UX and technology.